In today’s fast-paced digital landscape, system failures can cripple operations, drain resources, and damage reputation. That’s why mastering severity-based failure ranking is no longer optional—it’s essential.

🎯 Why Traditional Failure Management Falls Short

Organizations worldwide face a common challenge: not all system failures are created equal. Yet many teams still treat every bug report, system error, and performance issue with the same urgency. This scattershot approach leads to wasted resources, burned-out teams, and critical issues slipping through the cracks while minor glitches consume valuable time.

The reality is stark. A widely cited 2014 Gartner estimate put the average cost of IT downtime at $5,600 per minute, and actual figures vary widely with company size and industry. When teams can’t distinguish between a catastrophic failure threatening customer data and a cosmetic UI glitch, that cost keeps accruing while attention goes to the wrong problem. The solution lies in implementing a robust severity-based failure ranking system that transforms chaos into clarity.

Understanding the Foundation of Severity-Based Ranking

Severity-based failure ranking is a systematic approach to categorizing and prioritizing system failures based on their impact on business operations, user experience, and overall system integrity. This methodology creates a structured framework that empowers teams to make informed decisions about resource allocation and response strategies.

At its core, this system recognizes that different failures require different response levels. A complete system outage affecting thousands of users demands immediate all-hands-on-deck attention, while a minor visual inconsistency on a rarely used feature can wait for the next sprint cycle.

The Four Pillars of Effective Severity Classification

Building a robust severity-based ranking system requires understanding four fundamental pillars that define failure impact:

- Business Impact: How does this failure affect revenue, operations, or strategic objectives?

- User Experience: What is the scope and intensity of disruption to end-users?

- System Integrity: Does this failure compromise data security, system stability, or compliance requirements?

- Workaround Availability: Can users or operators bypass the issue while a permanent fix is developed?
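One way to make the four pillars operational is to score each one and derive a severity level from the scores. The sketch below is illustrative only: the 0 to 3 scales, the rule that the worst pillar sets the base level, the one-level reduction for an available workaround, and the override for severe integrity risks are all assumptions rather than a standard formula. The names `FailureAssessment` and `classify` are hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    """Lower value = more urgent, matching the P0..P3 naming."""
    P0 = 0  # Critical
    P1 = 1  # High
    P2 = 2  # Medium
    P3 = 3  # Low


@dataclass
class FailureAssessment:
    # Hypothetical 0-3 scales: 0 = no impact, 3 = severe impact
    business_impact: int        # revenue, operations, strategic objectives
    user_impact: int            # scope and intensity of user disruption
    integrity_risk: int         # security, stability, compliance
    workaround_available: bool  # can users or operators bypass the issue?


def classify(a: FailureAssessment) -> Severity:
    for value in (a.business_impact, a.user_impact, a.integrity_risk):
        if not 0 <= value <= 3:
            raise ValueError(f"pillar scores must be 0-3, got {value}")
    # A severe integrity risk (data loss, breach) is critical even with a workaround
    if a.integrity_risk == 3:
        return Severity.P0
    # The worst-scoring impact pillar sets the base level: 3 -> P0, 0 -> P3
    level = 3 - max(a.business_impact, a.user_impact, a.integrity_risk)
    # A workaround lowers urgency by one level, never past P3
    if a.workaround_available:
        level = min(level + 1, int(Severity.P3))
    return Severity(level)
```

Taking the maximum rather than a sum keeps one severe dimension from being diluted by several mild ones; a weighted sum is a reasonable alternative if your teams prefer graded scores.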

📊 Establishing Your Severity Level Framework

Creating a practical severity classification system requires clear definitions that everyone in your organization can understand and apply consistently. Here is a representative four-level framework using the common P0 to P3 convention:

| Severity Level | Response Time | Characteristics | Examples |

|---|---|---|---|

| Critical (P0) | Immediate | Complete system outage, data loss risk, security breach | Production database failure, payment system down, data breach |

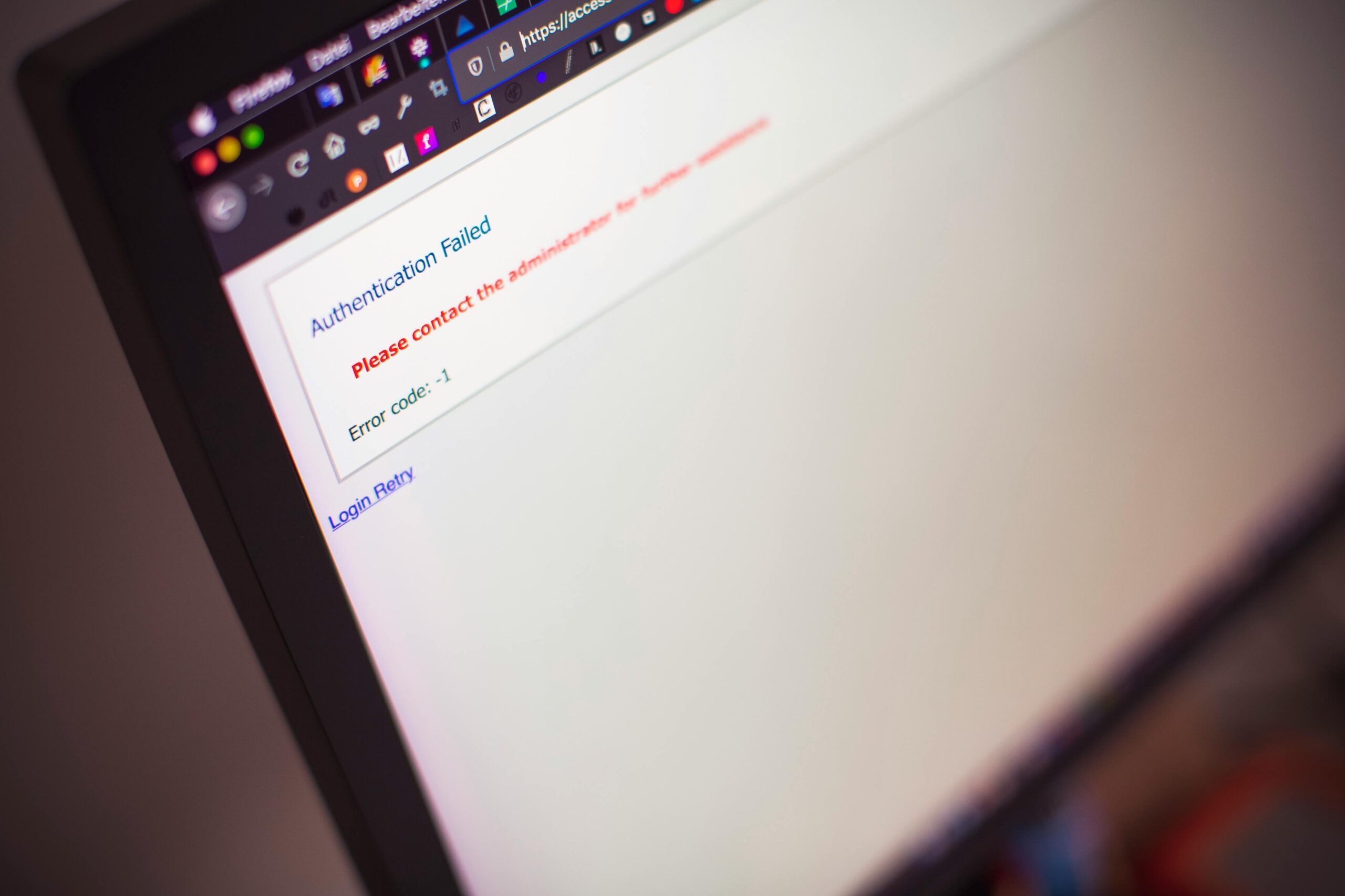

| High (P1) | Within 4 hours | Major functionality unavailable, significant user impact | Login system failure, core feature broken, performance degradation |

| Medium (P2) | Within 24 hours | Moderate impact, workaround available, limited user scope | Non-critical feature malfunction, minor data sync issues |

| Low (P3) | Next sprint cycle | Minimal impact, cosmetic issues, feature requests | UI inconsistencies, documentation errors, minor enhancements |
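Encoding the table as data lets tooling check response targets automatically. This is a minimal sketch, assuming "response time" means time to first response. The table says only "Immediate" for P0, so the 15-minute window below is a hypothetical placeholder, and P3 has no clock-based target because it waits for the next sprint. The function names are illustrative.

```python
from datetime import datetime, timedelta
from enum import Enum
from typing import Optional


class Severity(Enum):
    P0 = "Critical"
    P1 = "High"
    P2 = "Medium"
    P3 = "Low"


# Response targets from the table. "Immediate" is modelled as a hypothetical
# 15-minute acknowledgement window; P3 has no clock target (next sprint cycle).
RESPONSE_TARGET: dict[Severity, Optional[timedelta]] = {
    Severity.P0: timedelta(minutes=15),
    Severity.P1: timedelta(hours=4),
    Severity.P2: timedelta(hours=24),
    Severity.P3: None,
}


def response_deadline(severity: Severity, reported_at: datetime) -> Optional[datetime]:
    """Latest acceptable first-response time, or None if the level has no clock target."""
    target = RESPONSE_TARGET[severity]
    return None if target is None else reported_at + target


def is_breached(severity: Severity, reported_at: datetime,
                first_response_at: Optional[datetime], now: datetime) -> bool:
    """True if the target was missed, or has already passed while still unanswered."""
    deadline = response_deadline(severity, reported_at)
    if deadline is None:
        return False
    checked = first_response_at if first_response_at is not None else now
    return checked > deadline
```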

Implementing Severity-Based Ranking in Your Organization

Theory means nothing without practical implementation. Transforming your failure management approach requires a methodical rollout that considers people, processes, and technology. The following steps provide a roadmap for successful adoption.

Step One: Secure Stakeholder Buy-In

Change management begins at the top. Present compelling data to leadership showing the cost of current inefficiencies versus the benefits of structured prioritization. Calculate the financial impact of misallocated resources and demonstrate how severity-based ranking reduces mean time to resolution for critical issues while optimizing team productivity.

Include representatives from development, operations, customer support, and business units in planning discussions. Each perspective adds valuable insight into what constitutes severity for different failure types.

Step Two: Define Clear Escalation Protocols

Your severity framework is only as effective as your response protocols. Document specific actions for each severity level, including who gets notified, what resources are mobilized, and what communication channels activate.

For critical P0 incidents, your protocol might include immediate notification of on-call engineers, automatic activation of war room protocols, and executive-level communication within 30 minutes. Lower-severity issues follow proportionally scaled responses that conserve resources while maintaining service quality.
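Keeping escalation protocols in a versioned configuration, rather than only in a wiki page, makes them testable and reviewable. The sketch below encodes the P0 protocol described above (on-call notification, war room, executive update within 30 minutes); every P1 to P3 value, the `incident-commander` role, and the channel names are hypothetical placeholders to replace with your own.

```python
from dataclasses import dataclass
from datetime import timedelta
from typing import Optional


@dataclass(frozen=True)
class EscalationPolicy:
    notify: tuple[str, ...]        # roles or rotations to alert
    channels: tuple[str, ...]      # how they are alerted
    open_war_room: bool
    exec_update_within: Optional[timedelta]


# P0 follows the protocol in the text; P1-P3 are illustrative, proportionally scaled.
POLICIES = {
    "P0": EscalationPolicy(("on-call-engineers", "incident-commander"),
                           ("page", "phone"), True, timedelta(minutes=30)),
    "P1": EscalationPolicy(("on-call-engineers",), ("page", "chat"), False, timedelta(hours=2)),
    "P2": EscalationPolicy(("owning-team",), ("chat", "ticket"), False, None),
    "P3": EscalationPolicy(("owning-team",), ("ticket",), False, None),
}


def escalation_actions(severity: str) -> list[str]:
    """Expand a severity level into the concrete actions its policy requires."""
    policy = POLICIES[severity]
    actions = [f"notify {who} via {', '.join(policy.channels)}" for who in policy.notify]
    if policy.open_war_room:
        actions.append("open war room")
    if policy.exec_update_within is not None:
        minutes = int(policy.exec_update_within.total_seconds() // 60)
        actions.append(f"executive update within {minutes} min")
    return actions
```

Calling `escalation_actions("P0")` yields the two notifications, the war-room step, and the 30-minute executive update, which is a convenient thing to assert in a test whenever the policy changes.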

Step Three: Leverage Technology for Automation

Manual severity assessment creates bottlenecks and introduces inconsistency. Modern incident management platforms can automatically classify many failures based on predefined rules, system telemetry, and machine learning models trained on historical data.

Implement monitoring systems that detect failure patterns and assign preliminary severity ratings. Configure automated alerts that route issues to appropriate teams based on classification. This automation accelerates response times while freeing human judgment for complex edge cases requiring nuanced evaluation.
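A rule-based first pass is often the simplest form of this automation. The sketch below assigns a preliminary severity from alert telemetry using ordered rules, routes the result to a team, and sends anything no rule recognizes to human triage. The `Alert` fields, thresholds, service names, and team names are all hypothetical; this is not the rule syntax of any particular incident management platform.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class Alert:
    service: str
    error_rate: float                  # fraction of failed requests, 0.0 to 1.0
    affected_users: int
    data_integrity_flag: bool = False  # e.g. a checksum mismatch reported by monitoring


@dataclass
class Rule:
    name: str
    matches: Callable[[Alert], bool]
    severity: str


# Ordered from most to least severe; the first matching rule wins.
# All thresholds are illustrative placeholders.
RULES = [
    Rule("integrity", lambda a: a.data_integrity_flag, "P0"),
    Rule("outage", lambda a: a.error_rate >= 0.5, "P0"),
    Rule("major", lambda a: a.error_rate >= 0.05 or a.affected_users >= 1000, "P1"),
    Rule("degraded", lambda a: a.error_rate > 0.0, "P2"),
]

# Hypothetical service-to-team routing table
ROUTES = {"payments": "payments-oncall", "auth": "identity-oncall"}
TRIAGE_TEAM = "triage"


def classify_and_route(alert: Alert) -> Tuple[Optional[str], str, str]:
    """Return (preliminary severity, team, channel)."""
    rule = next((r for r in RULES if r.matches(alert)), None)
    if rule is None:
        # No rule fired: leave severity unset and hand the alert to a human
        return None, TRIAGE_TEAM, "ticket"
    team = ROUTES.get(alert.service, TRIAGE_TEAM)
    # Urgent levels page someone; the rest go into the team's queue
    channel = "page" if rule.severity in ("P0", "P1") else "ticket"
    return rule.severity, team, channel
```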

💡 Advanced Strategies for Severity Assessment

Once your basic framework is operational, advanced techniques can further refine your failure ranking accuracy and effectiveness.

Dynamic Severity Adjustment

Severity isn’t always static. A medium-severity issue affecting 50 users becomes critical when it suddenly impacts 50,000. Implement dynamic reassessment that monitors failure scope, duration, and emerging patterns. Build triggers that automatically escalate issues when thresholds are exceeded.

Consider temporal factors too. A payment processing glitch has different severity at 3 AM versus during peak shopping hours. Your system should account for these contextual variables when assigning priority.
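Both ideas, scope-based escalation and time-of-day context, can be expressed as a reassessment function that only ever raises severity. This is a sketch under stated assumptions: the affected-user thresholds, the evening peak window, and the one-level bump for revenue-path failures are hypothetical values chosen to mirror the 50-user and 3 AM examples above.

```python
from datetime import datetime, time

SEVERITY_ORDER = ["P3", "P2", "P1", "P0"]  # least to most severe

# Hypothetical thresholds: affected-user counts that imply a minimum severity
USER_THRESHOLDS = [(10_000, "P0"), (1_000, "P1"), (100, "P2")]

# Hypothetical peak window for a business whose traffic peaks in the evening
PEAK_START, PEAK_END = time(18, 0), time(23, 0)


def more_severe(a: str, b: str) -> str:
    return max(a, b, key=SEVERITY_ORDER.index)


def reassess(current: str, affected_users: int, at: datetime,
             revenue_path: bool = False) -> str:
    """Raise (never lower) severity as scope grows or context changes."""
    severity = current
    # Scope: the largest threshold reached sets a severity floor
    for threshold, floor in USER_THRESHOLDS:
        if affected_users >= threshold:
            severity = more_severe(severity, floor)
            break
    # Timing: revenue-path failures during peak hours move up one level
    if revenue_path and PEAK_START <= at.time() < PEAK_END:
        idx = min(SEVERITY_ORDER.index(severity) + 1, len(SEVERITY_ORDER) - 1)
        severity = SEVERITY_ORDER[idx]
    return severity
```

Run it whenever monitoring reports a new affected-user count and keep a record of each change, since that escalation history is itself a useful signal about first-pass classification quality.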

Cascading Failure Recognition

Individual failures rarely exist in isolation. What appears as a low-severity logging issue might actually signal an emerging critical database problem. Train your team to recognize cascading failure patterns and implement correlation tools that identify related incidents.

Machine learning algorithms excel at pattern recognition across complex systems. These tools can flag seemingly minor issues that historically preceded major outages, enabling preemptive action before small problems snowball into catastrophes.
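Before reaching for machine learning, a simple correlation pass often surfaces cascades: group incidents that occur close together in time on components with a dependency relationship. The sketch below does this with a union-find, so chains such as logging, then database, then checkout collapse into one group. The dependency map, the 15-minute window, and the incident IDs are hypothetical, and only direct dependencies count as related.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List, Set


@dataclass(frozen=True)
class Incident:
    id: str
    component: str
    severity: str
    at: datetime


# Hypothetical dependency map: component -> components it depends on
DEPENDS_ON: Dict[str, Set[str]] = {
    "checkout": {"payments", "database"},
    "payments": {"database"},
    "logging": {"database"},
    "database": set(),
}


def related(a: str, b: str) -> bool:
    """Same component, or one directly depends on the other."""
    return a == b or b in DEPENDS_ON.get(a, set()) or a in DEPENDS_ON.get(b, set())


def correlate(incidents: List[Incident],
              window: timedelta = timedelta(minutes=15)) -> List[List[str]]:
    """Group incidents close in time on related components (transitively, via union-find)."""
    parent = {inc.id: inc.id for inc in incidents}

    def find(x: str) -> str:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for i, a in enumerate(incidents):
        for b in incidents[i + 1:]:
            if abs(a.at - b.at) <= window and related(a.component, b.component):
                parent[find(a.id)] = find(b.id)

    groups: Dict[str, List[str]] = {}
    for inc in incidents:
        groups.setdefault(find(inc.id), []).append(inc.id)
    # Only multi-incident groups are interesting as possible cascades
    return [sorted(g) for g in groups.values() if len(g) > 1]
```

Here a low-severity logging incident ends up grouped with the database and checkout incidents that follow it, which is exactly the kind of link the paragraph above describes.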

The Human Element: Training and Culture

Technology and processes only succeed when supported by organizational culture and trained personnel. Building a severity-conscious culture requires ongoing investment in education and reinforcement.

Comprehensive Training Programs

Every team member who might report or triage failures needs thorough training on your severity framework. Create realistic scenarios that challenge participants to classify various failure types. Use case studies from your actual incident history to illustrate decision-making principles.

Conduct regular refresher sessions and update training materials as your framework evolves. Make severity assessment guidelines easily accessible through internal documentation systems, quick-reference cards, and integrated help within your incident management tools.

Fostering Accountability Without Blame

Effective severity-based systems thrive in blameless cultures where reporting failures is encouraged rather than punished. When team members fear consequences for acknowledging problems, they delay reporting or downplay severity—both catastrophic to effective incident management.

Implement post-incident reviews focused on system improvement rather than individual fault-finding. Celebrate catches of potential critical issues before they impact users. Recognize team members who accurately assess severity even when that means escalating uncomfortable situations.

🔍 Measuring Success and Continuous Improvement

What gets measured gets managed. Establish key performance indicators that track the effectiveness of your severity-based ranking system and identify improvement opportunities.

Essential Metrics to Monitor

- Mean Time to Detection (MTTD): How quickly failures are identified and classified

- Mean Time to Resolution (MTTR): Average resolution time by severity level

- Severity Classification Accuracy: Percentage of issues correctly classified on first assessment

- Escalation Rate: Frequency of severity level changes after initial classification

- Resource Allocation Efficiency: Engineering hours spent per severity category

- False Positive Rate: Incidents classified as critical that didn’t warrant that designation
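Most of these metrics fall out of a handful of timestamps and severity fields per incident. The sketch below assumes a hypothetical record with three severity fields (at first assessment, at close, and as agreed in the post-incident review), measures MTTR from detection, and counts engineering hours under the final severity; adjust those definitions to match how your organization reports them.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class IncidentRecord:
    started_at: datetime     # when the failure began (often known only afterwards)
    detected_at: datetime    # when it was detected and first classified
    resolved_at: datetime
    initial_severity: str    # severity at first assessment
    final_severity: str      # severity when the incident closed
    reviewed_severity: str   # severity agreed in the post-incident review
    engineer_hours: float


def minutes(td: timedelta) -> float:
    return td.total_seconds() / 60


def severity_metrics(records: list) -> dict:
    if not records:
        raise ValueError("no incidents to measure")
    n = len(records)
    mttr = defaultdict(list)
    hours = defaultdict(float)
    for r in records:
        mttr[r.final_severity].append(minutes(r.resolved_at - r.detected_at))
        hours[r.final_severity] += r.engineer_hours
    flagged_p0 = [r for r in records if r.initial_severity == "P0"]
    return {
        "mttd_min": mean(minutes(r.detected_at - r.started_at) for r in records),
        "mttr_min_by_severity": {s: mean(v) for s, v in mttr.items()},
        # First assessment matched the reviewed severity
        "classification_accuracy": sum(r.initial_severity == r.reviewed_severity for r in records) / n,
        # Severity changed between first assessment and close
        "escalation_rate": sum(r.initial_severity != r.final_severity for r in records) / n,
        "engineer_hours_by_severity": dict(hours),
        # Share of incidents first called P0 that the review judged not to be P0
        "p0_false_positive_rate": (
            sum(r.reviewed_severity != "P0" for r in flagged_p0) / len(flagged_p0)
            if flagged_p0 else 0.0
        ),
    }
```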

Analyze these metrics monthly and trend them quarterly. Look for patterns indicating training needs, process gaps, or system limitations requiring attention.

The Feedback Loop

Your severity framework should evolve based on real-world performance. Establish regular review cycles where teams assess whether current severity definitions still align with business realities. As your organization grows, enters new markets, or launches new products, impact assessments must adjust accordingly.

Solicit feedback from all stakeholders—engineers dealing with technical debt from delayed low-severity fixes, support teams managing customer expectations during incidents, and executives balancing risk against development velocity.

Preventing System Breakdowns Through Predictive Analysis

The ultimate goal of severity-based ranking extends beyond reactive incident management. When properly implemented, your failure classification data becomes a powerful tool for predictive prevention.

Pattern Recognition for Proactive Prevention

Analyze your historical failure data to identify patterns that precede critical incidents. Do certain low-severity errors consistently appear before major outages? Does failure frequency in specific components correlate with upcoming systemic issues?

Build predictive models that flag concerning patterns before they escalate. When your system detects the early warning signs of previous critical failures, proactive intervention can prevent the breakdown entirely—transforming your approach from reactive firefighting to strategic prevention.
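A simple starting point for "do certain low-severity errors appear before major outages?" is a precursor score: for each low-severity error signature, the fraction of its occurrences followed by a P0 within some window. The sketch below assumes events arrive as hypothetical `(timestamp, signature, severity)` tuples and uses a 6-hour window and a minimum sample size, both chosen arbitrarily for illustration.

```python
from bisect import bisect_right
from collections import Counter
from datetime import datetime, timedelta
from typing import Dict, Iterable, Tuple

Event = Tuple[datetime, str, str]  # (timestamp, error signature, severity)


def precursor_scores(events: Iterable[Event],
                     window: timedelta = timedelta(hours=6),
                     min_occurrences: int = 3) -> Dict[str, float]:
    """For each low-severity signature, the fraction of its occurrences
    followed by a P0 incident within `window`."""
    events = list(events)
    critical_times = sorted(at for at, _, sev in events if sev == "P0")
    totals, hits = Counter(), Counter()
    for at, signature, severity in events:
        if severity not in ("P2", "P3"):
            continue  # only low-severity signals are candidate precursors
        totals[signature] += 1
        # First P0 strictly after this event
        i = bisect_right(critical_times, at)
        if i < len(critical_times) and critical_times[i] <= at + window:
            hits[signature] += 1
    # Ignore rare signatures: too few samples to say anything
    return {sig: hits[sig] / n for sig, n in totals.items() if n >= min_occurrences}
```

A high score shows correlation, not cause. Before treating a signature as an early warning, compare its score against how often a P0 follows an arbitrary moment within the same window.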

Strategic Resource Planning

Historical severity data informs intelligent resource allocation. If analytics show that authentication systems generate the most critical failures, that evidence justifies increased investment in that area. When certain components consistently produce only low-severity issues, optimize rather than over-engineer those elements.

Use failure pattern analysis to guide technical debt prioritization, infrastructure investments, and team skill development. This data-driven approach ensures resources flow to areas generating maximum risk reduction.
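Raw incident counts can mislead here: a component with many cosmetic issues may look worse than one with a few outages. A severity-weighted tally corrects for that. The weights below are hypothetical and deliberately steep; the point of the sketch is the ranking method, not the specific numbers.

```python
from collections import Counter
from typing import Iterable, List, Tuple

# Hypothetical weights: how much one incident at each level counts toward risk
SEVERITY_WEIGHT = {"P0": 100, "P1": 20, "P2": 5, "P3": 1}


def rank_components(failures: Iterable[Tuple[str, str]]) -> List[Tuple[str, int]]:
    """failures: (component, severity) pairs. Returns (component, weighted risk),
    highest risk first."""
    risk: Counter = Counter()
    for component, severity in failures:
        risk[component] += SEVERITY_WEIGHT[severity]
    return risk.most_common()
```

With thirty P3 issues in the UI, two P0s and one P1 in authentication, and three P2s in search, authentication ranks first (220) despite having the fewest incidents, followed by the UI (30) and search (15).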

🚀 Real-World Success Stories

Organizations implementing rigorous severity-based ranking systems report substantial results, though most published figures are self-reported. One major e-commerce platform reportedly reduced critical incident response time by 73% within six months of implementation, and a financial services company reported a 58% year-over-year decrease in customer-impacting failures after adopting predictive severity analysis.

These successes share common characteristics: executive support, comprehensive training, appropriate tooling, and continuous refinement based on operational feedback. They suggest that severity-based failure ranking isn’t just theoretical best practice but a practical framework that can deliver measurable business value.

Taking Action: Your Path Forward

Mastering efficiency through severity-based failure ranking isn’t an overnight transformation. It’s a journey requiring commitment, investment, and persistence. Start small with a pilot team or single system, prove the concept, then expand systematically across your organization.

Begin by auditing your current failure management process. How are issues prioritized today? What inefficiencies exist? Where do critical failures slip through while resources focus on trivial issues? Use this baseline assessment to build your business case and measure future improvement.

Document your severity framework with crystal clarity. Ambiguity undermines consistency, so invest time creating detailed definitions, examples, and decision trees. Make this documentation living, accessible, and regularly updated.

Implement supportive technology, but remember tools serve your process—not the reverse. Choose solutions that integrate with existing workflows, provide flexibility for your unique requirements, and scale as your needs evolve.

The Competitive Advantage of Operational Excellence

In markets where milliseconds matter and users have countless alternatives, operational excellence isn’t optional. Your ability to prevent breakdowns, respond effectively when failures occur, and continuously improve system reliability directly impacts customer trust, revenue, and market position.

Severity-based failure ranking transforms chaotic incident management into strategic advantage. It ensures your best engineers focus on your biggest challenges. It accelerates resolution of truly critical issues while preventing resource waste on trivial problems. It builds organizational resilience through systematic learning from every failure.

Most importantly, it shifts your organization from reactive crisis management to proactive system optimization. When you understand failure patterns, predict emerging issues, and allocate resources strategically, you’re not just managing breakdowns—you’re preventing them.

The power is in your hands. Every system failure contains lessons waiting to be learned, patterns waiting to be recognized, and prevention opportunities waiting to be seized. By mastering severity-based failure ranking, you unlock that power and transform potential disasters into stepping stones toward unshakeable reliability.

Your systems deserve better than one-size-fits-all incident management. Your teams deserve clear priorities and effective processes. Your users deserve reliable, robust experiences. Severity-based failure ranking delivers all three, turning efficiency from aspiration into operational reality. The question isn’t whether you can afford to implement this approach—it’s whether you can afford not to.

Toni Santos is a systems reliability researcher and technical ethnographer specializing in the study of failure classification systems, human–machine interaction limits, and the foundational practices embedded in mainframe debugging and reliability engineering origins. Through an interdisciplinary and engineering-focused lens, Toni investigates how humanity has encoded resilience, tolerance, and safety into technological systems across industries, architectures, and critical infrastructures.

His work is grounded in a fascination with systems not only as mechanisms, but as carriers of hidden failure modes. From mainframe debugging practices to interaction limits and failure taxonomy structures, Toni uncovers the analytical and diagnostic tools through which engineers preserved their understanding of the machine-human boundary.

With a background in reliability semiotics and computing history, Toni blends systems analysis with archival research to reveal how machines were used to shape safety, transmit operational memory, and encode fault-tolerant knowledge.

As the creative mind behind Arivexon, Toni curates illustrated taxonomies, speculative failure studies, and diagnostic interpretations that revive the deep technical ties between hardware, fault logs, and forgotten engineering science.

His work is a tribute to:

- The foundational discipline of Reliability Engineering Origins

- The rigorous methods of Mainframe Debugging Practices and Procedures

- The operational boundaries of Human–Machine Interaction Limits

- The structured taxonomy language of Failure Classification Systems and Models

Whether you're a systems historian, reliability researcher, or curious explorer of forgotten engineering wisdom, Toni invites you to explore the hidden roots of fault-tolerant knowledge: one log, one trace, one failure at a time.